Boost Conversions with Conversion Rate Optimization Marketing: A Practical Guide


Conversion Rate Optimization, or CRO, is a technical term for a simple idea: getting more website visitors to actually do something. That "something" could be signing up for a demo, buying your product, or whatever action you want them to take.

It’s way more than just experimenting with button colours. Real CRO is a full-funnel discipline. It’s about digging into the entire user experience to turn the traffic you already have into qualified leads and paying customers.

Moving Beyond Basic Landing Page CRO

For years, the B2B playbook was painfully simple: drive traffic to a landing page and gate a whitepaper behind a form. Sure, that model still exists, but let's be honest—it’s dying a slow death.

Today's buyers are smarter. They’re tired of handing over their email for a generic PDF that probably won’t solve their problem anyway.

This is where a modern take on conversion rate optimization marketing completely changes the game. It’s about shifting your focus from a simple lead capture to creating a genuine value exchange. You stop just gating content and start engineering experiences that let users help themselves, solve a tiny piece of their problem, and qualify themselves in the process.

The Shift from Gated Content to Interactive Tools

Think about it. Traditional lead magnets like eBooks and whitepapers are completely passive. A user downloads it, and you just cross your fingers and hope they read it and see how brilliant you are.

Interactive tools, on the other hand, spark an active, two-way conversation.

- Quizzes: A marketing agency could build a "What's Your Digital Marketing Maturity Score?" quiz. The user gets an instant, personalized benchmark. The agency gets a goldmine of data about that prospect's specific challenges.

- Calculators: Imagine a SaaS company offering an ROI calculator. It instantly shows potential customers exactly how much money they could save with the software. This isn't just marketing; it's providing tangible value and framing the product's benefits in cold, hard cash.

- Assessments: A cybersecurity firm could create a "Security Risk Assessment" tool. The user gets a report showing their weak spots, and the firm gets a red-hot lead who has just admitted they have security gaps.

These tools don’t just generate leads; they generate better leads. The data you collect is zero-party data—information willingly handed over by the user that spells out their pain points, goals, and buying intent. If you want to dive deeper into optimizing these first touchpoints, check out our guide on the best practices for landing pages.

Engineering High-Conversion Funnels

Hitting conversion rates in the 40–55% range with interactive funnels isn't a fantasy. It's possible, but it demands a total shift in how you think. Stop trying to persuade and start trying to serve. This playbook is designed to show you exactly how to build these high-performance funnels.

The core principle is simple: stop making users fill out a form to get value. Instead, let them receive value by filling out the form. The form itself becomes the tool, the experience, and the solution.

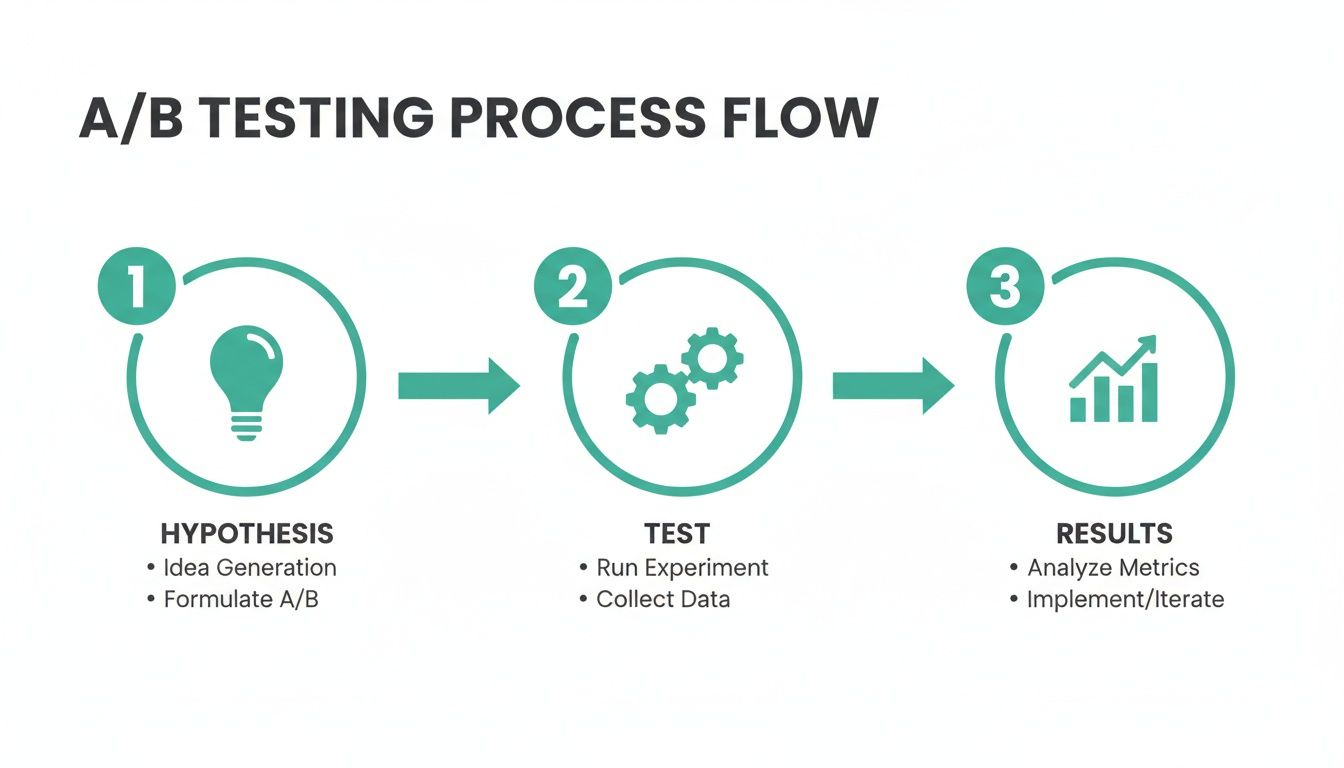

We’re going to move from abstract ideas to a practical, step-by-step framework. You'll learn how to instrument your funnel, come up with data-backed hypotheses, design tests that actually matter, and build interactive lead generation patterns that put the user first. This approach ensures every single interaction is a chance to deliver value, build trust, and ultimately, drive conversions.

Building a Foundation for High-Impact Experiments

Meaningful conversion rate optimization isn't about throwing random ideas at a wall and hoping something sticks. It's a systematic process that starts long before you ever launch an A/B test. The real work is in building a foundation—a deep, data-driven understanding of what your users are actually doing on your site.

Guesswork is the enemy of high-impact CRO.

You might think your demo request form is too long. You might have a hunch that a CTA button is in the wrong place. But without data, those are just opinions. To get from a vague assumption to a testable hypothesis, you have to become an expert observer of the user journey.

This means properly instrumenting your marketing funnel to see what's really going on. You need tools that go beyond basic analytics and show you the why behind the numbers.

Uncovering Friction with Behavioural Analytics

Let's start with a common scenario. Your analytics show a huge drop-off rate on your demo request page. That’s the what. To find the why, you need to see the page through your users' eyes. This is exactly where behavioural analytics tools come in.

- Heat maps show you where people are clicking, how they're moving their mouse, and how far they scroll. You might discover they're hammering a non-clickable element out of frustration, or that most visitors never even scroll down to see your main call-to-action.

- Session recordings are like watching a DVR of a user's entire visit. You can see them rage-clicking a broken button, hesitating over a confusing form field, or getting completely lost in your navigation. These recordings give you undeniable proof of user friction.

When you combine these tools, you can pinpoint the exact moments of frustration. This evidence is the raw material for building a strong, data-backed hypothesis that actually has a fighting chance of improving performance.

From Observation to a Testable Hypothesis

A well-formed hypothesis is the cornerstone of any experiment. It’s not a question; it's a clear, testable statement that spells out a proposed change, a predicted outcome, and the reason you think it will work.

The structure is simple: "If we [implement this change], then [this metric] will increase because [this reason]."

Real-World Scenario: Fixing a Demo Request Page

Imagine you’ve been watching session recordings of your demo page. You spot a pattern: lots of users start filling out the form, but bail the second they hit the "Phone Number" field. Your heat map backs it up: interaction with the form falls off sharply at that exact field.

Your observation just became a data-backed insight. Now you can build a solid hypothesis:

If we remove the mandatory 'Phone Number' field from our demo request form, then form submission rates will increase because we are reducing user friction and the perceived privacy invasion at a critical point in the funnel.

This statement is specific, measurable, and directly tackles the problem you saw. Now you have a clear foundation for an A/B test: Version A (with the phone field) versus Version B (without it). This systematic approach ensures your conversion rate optimisation marketing efforts are focused and impactful, not just shots in the dark.

The data you gather from these early investigations can also light the way for bigger strategic decisions. For instance, running a full audit of your existing assets can reveal user friction patterns across your entire site. To see what this looks like in practice, you can check out some detailed content audit examples that show how to turn user behaviour into actionable improvements.

This dedication to data-driven UX is what separates the winners from the losers in competitive markets. Successful agencies routinely use heat maps, session recordings, and A/B testing to push conversion rates sky-high, turning traffic into qualified leads at scale. You can find more insights into how CRO agencies achieve this on Varify.io. By adopting a similar, evidence-based approach, you can make sure every experiment you run is designed for maximum impact.

How to Design and Run CRO Tests That Actually Work

Alright, you've done the homework and have a solid, data-backed hypothesis. Now for the fun part: running the experiment. This is where we stop guessing and start proving what actually moves the needle. The goal isn't just to launch a bunch of tests; it's to run the right tests, meticulously, so the data you get back is clean, reliable, and tells you exactly what to do next.

Success here is all about discipline. You need to pick the right tool for the job—A/B or multivariate—and get the non-negotiables of experimental design right. I'm talking about things like statistical significance and sample size. If you mess this up, your results are worthless, and you might as well have just flipped a coin.

A/B vs. Multivariate: Picking the Right Fight

The two heavyweights in CRO testing are A/B tests and multivariate tests. They look similar but solve very different problems. Knowing when to use each is critical.

A/B Testing (or Split Testing) is your bread and butter. It's a straight-up duel between two versions of a page: Version A (the original, your "control") versus Version B (the new challenger with one specific change, the "variant"). You split your traffic down the middle and see which version gets you closer to your goal.

A/B testing is your go-to for:

- Big, bold swings: Think redesigning an entire landing page, overhauling your value proposition, or swapping a boring static form for an interactive calculator.

- Clear, fast answers: Because you're comparing two versions that differ in one deliberate way, cause and effect are easy to read. It’s perfect for sites with moderate traffic that can't afford to wait months for an answer.

- Validating a focused hypothesis: If your theory is "getting rid of the phone number field will boost form fills," an A/B test is the cleanest way to prove it.
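To make "split your traffic down the middle" concrete, here's a minimal Python sketch of one common approach: hashing a visitor ID so the same person always lands on the same version. The function name, the experiment label, and the visitor ID format are made up for illustration, and most testing tools handle this step for you.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "demo-form-phone-field") -> str:
    """Deterministically assign a visitor to version "A" or "B".

    Hashing the experiment name together with the visitor ID means a
    returning visitor always sees the same version, and different
    experiments split the audience independently of each other.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # An even hash value goes to the control, an odd one to the variant.
    return "A" if int(digest, 16) % 2 == 0 else "B"


# Hypothetical visitor IDs, purely for illustration.
for visitor in ("visitor-101", "visitor-102", "visitor-103"):
    print(visitor, "->", assign_variant(visitor))
```

Because the assignment depends only on the ID, you don't need to store who saw what in order to keep the experience consistent across visits.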

Multivariate Testing (MVT) is a different beast entirely. It's more complex. Instead of testing one major change, MVT lets you test multiple, smaller changes across several elements on a page at the same time. You could test two headlines, three button colours, and two hero images all at once to find the absolute winning combination.

MVT is best for:

- Fine-tuning high-performers: Use it when you're trying to squeeze extra performance out of an already-good page by seeing how minor tweaks interact.

- High-traffic environments only: MVT needs a ton of traffic to reach statistical significance for every possible combination. Don't even think about it otherwise.
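To see why MVT is so traffic-hungry, it helps to count the combinations. The sketch below uses the two headlines, three button colours, and two hero images from the example above. The variation names and the 2,000-visitors-per-version figure are assumptions for illustration only.

```python
from itertools import product

# Hypothetical variations for each page element.
headlines = ["headline_a", "headline_b"]
button_colours = ["green", "orange", "blue"]
hero_images = ["team_photo", "product_shot"]

# Every possible page is one headline + one colour + one image.
combinations = list(product(headlines, button_colours, hero_images))
num_combinations = len(combinations)  # 2 * 3 * 2

# Assumed figure: visitors each version needs for a reliable read.
visitors_per_version = 2_000

mvt_visitors_needed = num_combinations * visitors_per_version
ab_visitors_needed = 2 * visitors_per_version  # control + one variant

print(f"MVT versions to test: {num_combinations}")
print(f"MVT visitors needed:  {mvt_visitors_needed}")
print(f"A/B visitors needed:  {ab_visitors_needed}")
```

Under these assumptions the multivariate test needs six times the traffic of a simple A/B test, which is the practical reason to start with A/B.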

For most B2B marketers, the answer is simple: start with A/B testing. It gives you clean, actionable insights without needing the traffic of a Fortune 500 company.

A/B testing is a foundational method for data-driven CRO, allowing you to compare different versions of a page to see which performs better. For practical guidance on how to structure these experiments effectively, especially with paid traffic, there are great resources on how to properly A/B test landing pages.

The Non-Negotiables of Experimental Design

Let me be blunt: running a sloppy test is worse than running no test at all. It gives you false confidence to make bad decisions. Before you launch anything, you absolutely must lock down two things.

First, your sample size. This is the number of people who need to see each version of your page to get a reliable result. Running a test for just a day or on a few dozen visitors is a waste of time—you're just measuring random noise. Use a sample size calculator to figure out how many visitors you need, based on your current conversion rate and the minimum lift you'd consider a win.
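If you're curious what a sample size calculator does under the hood, here's a rough Python sketch using the standard normal-approximation formula for comparing two conversion rates. The 80% power default is my assumption (a common convention, not something this guide prescribes), and real calculators may use slightly different formulas, so treat the output as a ballpark.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per version for a two-sided test.

    baseline_rate: current conversion rate, e.g. 0.05 for 5%
    relative_lift: minimum lift you'd consider a win, e.g. 0.20 for +20%
    alpha:         1 - confidence level (0.05 means 95% confidence)
    power:         chance of detecting the lift if it is real (assumed 80%)
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


# Example: 5% baseline, and we only care about a lift of 20% or more.
print(sample_size_per_variant(0.05, 0.20))
```

With a 5% baseline and a 20% minimum lift, this lands at roughly eight thousand visitors per version. Halve the lift you want to detect and the requirement grows several times over, which is why a few dozen visitors tells you nothing.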

Second, you have to run the test long enough to judge statistical significance properly. The industry standard is a 95% confidence level. This means that if there were truly no difference between the versions, you'd see a gap this large less than 5% of the time. Calling a test early because one version is ahead is the single most common mistake I see. It’s a recipe for false positives. Let the test run until it reaches the sample size you calculated up front, then check whether the result is significant. No shortcuts.
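For a sense of what your testing tool is checking, here's a minimal sketch of a two-proportion z-test in Python. This is one standard way to test significance, not necessarily the method your tool uses, and the visitor and conversion counts are invented for the example.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate: the conversion rate if both versions were really the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_error == 0:
        raise ValueError("Need at least one conversion and one non-conversion")
    z = (rate_b - rate_a) / std_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Invented numbers: 8,000 visitors per version.
p_value = two_proportion_p_value(conv_a=400, n_a=8000, conv_b=480, n_b=8000)
print(f"p-value: {p_value:.4f}")
print("Significant at 95%" if p_value < 0.05 else "Not significant yet")
```

A p-value below 0.05 corresponds to the 95% threshold. Run the check once, after you've reached your planned sample size, rather than every morning until it turns green.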

A Real-World B2B Testing Scenario

Let's make this real. Imagine you want to improve a pricing page. Right now, it has a standard, boring "Contact Sales" form. Your hypothesis is: "If we replace the static contact form with an interactive ROI calculator, then demo requests will increase because we are providing immediate, personalized value instead of creating a barrier."

Here’s how you’d set that up:

- Test Type: A/B Test. Nice and simple.

- Control (Version A): The current pricing page with the "Contact Sales" form.

- Variant (Version B): A new page where the form is replaced by an ROI calculator. It asks a few questions, spits out a personalized ROI estimate, and then asks for their contact info.

- Primary KPI: Demo requests submitted. That's the only number we care about for this test.

- Audience Segmentation: Run this test only on new visitors from organic search and paid campaigns to keep the audience clean and consistent.

You run this test until you hit your calculated sample size and achieve statistical significance. Then, and only then, you'll have a clear, data-driven answer. You'll know for a fact whether the interactive tool crushes the static form, giving you a powerful insight to scale across your entire conversion rate optimisation marketing strategy.

Engineering High-Conversion Interactive Experiences

Let's be honest, static forms and gated whitepapers are dinosaurs. They’re relics from a marketing era that died a few years ago. They create a ton of friction, demand a user's data upfront, and in return, offer some delayed, usually generic, PDF. It's a broken model.

Today, smart conversion rate optimisation marketing flips that entire script. Think "engineering as marketing." You create interactive experiences—calculators, quizzes, assessments—that deliver real, immediate value while the user is converting.

Instead of asking someone to fill out a boring form to get something useful, the form is the useful thing. This is a huge shift. We're moving from passive content consumption to active participation, and it's why these interactive tools are hitting conversion rates that static lead magnets can only dream of.

When someone uses a well-designed calculator on your site, they're not just reluctantly handing over their data. They're actively solving a small part of their problem, right then and there. They get an instant benchmark, a personalized tip, or a clear answer. That value exchange feels fair and genuinely helpful, which is why they're so much more likely to see it through to the end.

This whole process is a continuous loop of testing and improving. You form a hypothesis, run the experiment, analyze what happened, and then refine it. It's not a one-and-done deal.

The key takeaway here is that improvement isn’t a single action; it's a systematic process. Each interactive element gets sharper and more effective based on real user behaviour.

Blueprints for High-Engagement Interactive Tools

Building an interactive tool that actually converts is about more than just asking good questions. You have to be obsessive about the user experience, guiding them on a smooth journey from the first click to the final result. The name of the game is maintaining momentum and keeping their cognitive load as low as possible.

Here are a few core principles I stick to:

- One Question at a Time: Nobody wants to face a wall of form fields. It's intimidating. Presenting one simple, clear question per screen keeps the user focused and makes the whole thing feel fast and easy.

- Visual Progress Indicators: A simple progress bar is a surprisingly powerful psychological nudge. It shows users how far they've come and, more importantly, how close they are to getting their answer. It's a massive encouragement to finish.

- Immediate Feedback and Value: The best experiences feel like a conversation. Use conditional logic to ask smart follow-up questions based on their last answer. This makes the journey feel personal and intelligent, not like a static survey.

The real magic of interactive content is its ability to create a "curiosity gap." With every question a user answers, their anticipation for the final result builds. This makes it incredibly difficult for them to bail halfway through.

Imagine a B2B SaaS company creates a "Cloud Cost Optimization Calculator." It wouldn't just be a spreadsheet with input fields. It would walk the user through a few simple, multiple-choice questions about their current setup. With each click, the user gets closer to a tangible, valuable outcome: a personalized report showing them exactly how much they could save. That desire to see the final number is what drives ridiculously high completion rates.

For more ideas on weaving this into your site, check out the core concepts behind adding interactivity on websites.

Structuring a Multi-Step Quiz with Conditional Logic

Let's get practical. Here’s how this works for a marketing agency trying to generate qualified leads. The goal is to let businesses self-assess their own marketing maturity.

The User Journey:

- The Hook (Question 1): Start broad and relatable. "What's your single biggest marketing challenge right now?" Give them clear options like "Generating More Leads," "Proving ROI," or "Building Brand Awareness."

- Conditional Branching (Question 2): Now, get personal. If they chose "Generating More Leads," your next question shouldn't be generic. It should be, "Which channels are you currently using for lead gen?" This immediate personalization proves you're actually listening.

- Digging Deeper (Questions 3-5): Ask a few more targeted questions related to their initial challenge. This is where you gather crucial zero-party data about their specific needs, maybe even their budget or team size.

- The Gate (Lead Capture): After you've delivered this engaging, personalized journey, you make your ask: "Enter your email, and we'll send you the full 'Personalized Marketing Maturity Score & Report'." By now, the user is highly invested. The exchange feels completely fair.

- The Payoff (Results Page): Don't make them wait. Instantly show them their score and a high-level summary on the results page. The detailed report hits their inbox, kicking off a nurture sequence that's perfectly tailored to the exact problems they just told you they have.

This entire flow is engineered to build trust and provide value before you ask for their contact info.

You're not just capturing another lead; you're starting a highly relevant conversation with a potential customer who has already told you exactly how you can help them.
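The branching in that journey can be sketched as a small lookup table in Python. The first two questions come from the example above. The answer options for the channels question, the other two follow-up questions, and all the IDs are hypothetical, since only the lead gen branch is spelled out here.

```python
# Each question maps every answer to the ID of the next question.
# None means "no more questions, move on to the lead capture step".
QUIZ = {
    "challenge": {
        "text": "What's your single biggest marketing challenge right now?",
        "next": {
            "Generating More Leads": "lead_channels",
            "Proving ROI": "roi_tracking",
            "Building Brand Awareness": "brand_reach",
        },
    },
    "lead_channels": {
        "text": "Which channels are you currently using for lead gen?",
        "next": {"Paid ads": None, "Content and SEO": None, "Events": None},
    },
    # Hypothetical follow-ups for the other two branches.
    "roi_tracking": {
        "text": "How do you currently track marketing ROI?",
        "next": {"Spreadsheets": None, "Analytics dashboard": None, "We don't": None},
    },
    "brand_reach": {
        "text": "Where does your audience mostly hear about you today?",
        "next": {"Social media": None, "Word of mouth": None, "Not sure": None},
    },
}


def next_question(question_id, answer):
    """Return the ID of the follow-up question for a given answer."""
    options = QUIZ[question_id]["next"]
    if answer not in options:
        raise ValueError(f"Unknown answer {answer!r} for question {question_id!r}")
    return options[answer]


def walk_quiz(answers, start="challenge"):
    """Return the (question_id, answer) path a user takes through the quiz."""
    path = []
    current = start
    for answer in answers:
        if current is None:
            break
        path.append((current, answer))
        current = next_question(current, answer)
    return path


print(walk_quiz(["Generating More Leads", "Content and SEO"]))
```

Keeping the flow as data rather than nested if-statements makes it easy to add deeper questions later: you only add entries to the table.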

Using Zero-Party Data for Lead Scoring and Nurturing

Getting the lead is just the start of the game. The real magic of interactive tools isn't the conversion itself, but what you do afterwards. You're sitting on a goldmine of zero-party data—information people have willingly handed over. This is your key to a much smarter, more effective marketing and sales engine.

Forget those old-school lead scoring models that guess a lead's value based on flimsy proxies like job titles or company size. Now you can build your system on actual, stated intent. This is a massive leap forward in conversion rate optimisation marketing because it finally closes the gap between marketing activity and genuine sales readiness.

From Answers to Actionable Segments

The answers someone gives you in a quiz or calculator aren't just data points. They're direct signals telling you about their biggest challenges, their goals, and how urgently they need a fix. Your first job is to turn these signals into a lead scoring framework that makes sense.

This means assigning points to specific answers. Some answers scream "hot lead," while others whisper "just browsing."

Let's say you're using a "Marketing Maturity Assessment." If someone says they have a dedicated budget and a team of five, boom—that’s +20 points. But if another user admits they're a solo operator with zero budget, that might be -10 points.

This simple scoring system lets you automatically sort every single lead into a handful of useful buckets:

- High Intent / Sales-Ready: These folks have the exact problems you solve and fit your ideal customer profile to a T. Their score is high, and they need to go straight to sales for an immediate, personal follow-up.

- Research Phase / Nurture: They're interested, but maybe not ready to buy today. They could be exploring options or working with a longer timeline. They get a mid-range score and drop into a targeted email nurture sequence.

- Low Fit / Disqualified: These leads are clearly not a match (think students, competitors, or tire-kickers). A low score keeps your pipeline clean by either disqualifying them or adding them to a general newsletter.

The beauty of this is that you're segmenting people based on the problems they told you they have. Sales stops flying blind, and marketing stops spraying generic messages at everyone. Every single follow-up becomes hyper-relevant.
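Here's a minimal Python sketch of that points-per-answer idea. The starting score of 50, the question keys, and the individual point values are all assumptions for illustration. They are chosen so that a dedicated budget plus a team of five adds up to +20 and a solo operator with no budget adds up to -10, matching the example above.

```python
BASE_SCORE = 50  # assumed neutral starting point

# Hypothetical point values per answer.
ANSWER_POINTS = {
    "budget": {"dedicated": 10, "none": -5},
    "team_size": {"5_or_more": 10, "solo": -5},
    "timeline": {"under_3_months": 15, "3_to_6_months": 5, "just_browsing": -20},
}


def score_lead(answers):
    """Turn a dict of {question: answer} into a score between 0 and 100."""
    score = BASE_SCORE
    for question, answer in answers.items():
        # Answers without a rule simply contribute nothing.
        score += ANSWER_POINTS.get(question, {}).get(answer, 0)
    return max(0, min(100, score))


funded_team = {"budget": "dedicated", "team_size": "5_or_more"}
solo_operator = {"budget": "none", "team_size": "solo"}

print(score_lead(funded_team))    # 50 + 20
print(score_lead(solo_operator))  # 50 - 10
```

The exact numbers matter less than agreeing on them with sales, so that a "high score" means the same thing to both teams.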

Building a Nurture Flow That Continues the Conversation

Once your leads are segmented, you can build out personalized email flows for each bucket. The goal here isn't to just blast them with a generic welcome sequence. It's to continue the conversation you already started.

Sticking with our "Marketing Maturity Assessment" example, let's look at the 'Research Phase' segment.

Imagine a lead finishes the quiz and reveals their main challenge is "Proving ROI." They get a mid-range score and are automatically dropped into this flow:

- Email 1 (Immediate): Delivers their full assessment report. The subject line is personalized: "Your Marketing Maturity Score & Tips for Proving ROI." The email gives them a quick summary and links to a case study about a similar company that cracked its ROI problem.

- Email 2 (2 Days Later): Hits their pain point directly. Maybe it's a blog post on "5 Common Mistakes Marketers Make When Tracking ROI" or a short video showing how to set up better analytics dashboards.

- Email 3 (4 Days Later): Offers another high-value resource, like a downloadable template for a marketing ROI report. You're building trust by solving their problem, not just pitching your product.

- Email 4 (7 Days Later): A softer call to action. This could be an invite to a webinar on "Data-Driven Marketing" or a simple check-in from a "marketing advisor" to see if they have any questions.
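If you're wiring this flow into an automation tool, the schedule boils down to a list of day offsets. The sketch below assumes each delay is counted from the day the quiz was completed, which the sequence above doesn't state explicitly, and the labels for emails 3 and 4 are shorthand of my own.

```python
from datetime import date, timedelta

# (days after quiz completion, what gets sent)
NURTURE_FLOW = [
    (0, "Your Marketing Maturity Score & Tips for Proving ROI"),
    (2, "5 Common Mistakes Marketers Make When Tracking ROI"),
    (4, "Marketing ROI report template"),           # shorthand label
    (7, "Webinar invite: Data-Driven Marketing"),   # shorthand label
]


def send_schedule(completed_on):
    """Return a list of (send_date, email) pairs for one lead."""
    return [
        (completed_on + timedelta(days=offset), email)
        for offset, email in NURTURE_FLOW
    ]


for send_date, email in send_schedule(date(2024, 5, 1)):
    print(send_date.isoformat(), "-", email)
```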

This turns your follow-up from a monologue into a genuine dialogue, which dramatically boosts lead-to-opportunity rates.

The data backs this up. In some e-commerce sectors, for example, advanced CRO adoption is pushing conversion rates to an impressive 3.5%, way above the global average. This success comes from personalized experiences that can lift conversions by 20-320%—a playbook B2B marketers can easily copy with intent-driven funnels. You can dig into these stats over at Digital Web Solutions.

To make this even clearer, here's a simple model showing how you might score and segment leads from an interactive tool.

Example B2B Lead Scoring And Segmentation Model

This table shows how you can translate answers from an assessment directly into lead scores and automated actions, connecting data to your sales and marketing follow-up.

| Lead Segment | Defining Criteria (Example Answers) | Lead Score Range | Automated Follow-Up Action |
|---|---|---|---|
| High Intent | "Budget > $50k," "Team Size > 10," "Timeline < 3 months" | 75–100 | Immediate hand-off to sales with a personalized alert |
| Research Phase | "Budget < $50k," "Team Size 2–9," "Timeline 3–6 months" | 40–74 | Enters a 4-part educational email nurture sequence |
| Low Fit | "No budget," "Just browsing," "Student" | 0–39 | Added to the monthly newsletter for long-term engagement |

This systematic approach makes sure your team’s time and resources are laser-focused on the leads that matter most. Your interactive tools stop being just lead magnets and become a predictable engine for growing your pipeline.
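The score ranges in the table translate almost directly into code. Here's a small Python sketch of the routing step. The function and variable names are mine, and in practice your CRM or marketing automation platform would trigger the follow-up action.

```python
# (minimum score, segment, automated follow-up), highest threshold first.
SEGMENTS = [
    (75, "High Intent", "Immediate hand-off to sales with a personalized alert"),
    (40, "Research Phase", "Enters a 4-part educational email nurture sequence"),
    (0, "Low Fit", "Added to the monthly newsletter for long-term engagement"),
]


def segment_for(score):
    """Map a 0-100 lead score to its segment and follow-up action."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    for minimum, segment, action in SEGMENTS:
        if score >= minimum:
            return segment, action


for example_score in (82, 55, 12):
    segment, action = segment_for(example_score)
    print(f"{example_score}: {segment} -> {action}")
```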

How to Measure and Scale Your CRO Programme

A winning conversion rate optimisation marketing programme isn't about getting a few lucky wins. It's about building a predictable system that keeps getting better and fuels your pipeline. To pull this off, you have to look past the simple conversion rate and start tracking the metrics that actually show business impact.

The goal is to stop chasing more leads and start generating better leads, faster. This means you need a smarter set of key performance indicators (KPIs) that connect what you do in marketing directly to what happens in sales.

Moving Beyond Surface-Level Metrics

Look, the raw conversion rate is a decent place to start, but it doesn't tell you the whole story. A high conversion rate is totally useless if the leads are junk and sales won't touch them. To really know if your CRO programme is working, you need to track what happens after the conversion.

- Lead Quality Score: This grades leads based on how good a fit they are and how interested they seem, often using the data you collect from your interactive tools. If the average score from a test variant goes up, you know your changes are attracting better prospects.

- Cost Per Qualified Lead (CPQL): This is where the rubber meets the road. It tells you exactly how much it costs to generate a lead that sales actually accepts. It's the clearest view you'll get of your CRO programme's financial efficiency.

- Sales Cycle Length: This one is powerful but so many people ignore it. If leads from your new interactive funnel go from first touch to closed-won faster, that's a huge sign you're sending higher-quality, better-educated prospects over to the sales team.
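Two of these metrics are simple arithmetic, so here's a quick Python sketch of how you might calculate them. The spend, lead counts, and dates are invented, and "qualified" is assumed to mean "accepted by sales," as described above.

```python
from datetime import date
from statistics import mean


def cost_per_qualified_lead(total_spend, qualified_leads):
    """Spend divided by the number of leads sales actually accepted."""
    if qualified_leads == 0:
        raise ValueError("No qualified leads yet, so CPQL is undefined")
    return total_spend / qualified_leads


def average_sales_cycle_days(deals):
    """Average days from first touch to closed-won.

    deals: list of (first_touch_date, closed_won_date) pairs.
    """
    return mean([(closed - first_touch).days for first_touch, closed in deals])


# Invented numbers for one campaign.
print(cost_per_qualified_lead(total_spend=12_000, qualified_leads=40))

closed_deals = [
    (date(2024, 1, 1), date(2024, 1, 31)),  # 30 days
    (date(2024, 3, 1), date(2024, 4, 20)),  # 50 days
]
print(average_sales_cycle_days(closed_deals))
```

Track both per funnel or per test variant, not just site-wide, so you can tell whether the interactive funnel is the one sending over faster-closing leads.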

And when you're looking at your interactive elements, it's also critical to understand the direct impact of live chat on conversion rates, since it gives you another layer of engagement data to work with.

Creating a Prioritised CRO Roadmap

You'll never run out of ideas for tests. The trick is having a simple way to decide what to do next so you don't waste time on tiny tweaks that won't move the needle. A method that's popular for a reason is the ICE scoring model.

ICE is just an acronym for:

- Impact: How big of a deal will this be for our main KPI if it works? (Score 1-10)

- Confidence: How sure are we this will actually work, based on data or past tests? (Score 1-10)

- Ease: How simple is this to actually build and launch, technically and operationally? (Score 1-10)

Just multiply the scores (Impact × Confidence × Ease) to get a final ICE score for every test idea. The ones with the highest scores jump to the top of your list. Suddenly, you have a backlog driven by data, not just a list of random suggestions from the team.
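Here's what that looks like as a few lines of Python. The test ideas and their 1-10 scores are hypothetical, so plug in your own backlog.

```python
from dataclasses import dataclass


@dataclass
class ExperimentIdea:
    name: str
    impact: int      # 1-10
    confidence: int  # 1-10
    ease: int        # 1-10

    @property
    def ice_score(self) -> int:
        return self.impact * self.confidence * self.ease


def prioritise(ideas):
    """Sort ideas so the highest ICE score comes first."""
    return sorted(ideas, key=lambda idea: idea.ice_score, reverse=True)


# Hypothetical backlog with made-up scores.
backlog = [
    ExperimentIdea("Replace contact form with ROI calculator", 8, 6, 4),
    ExperimentIdea("Remove phone number field", 6, 8, 9),
    ExperimentIdea("Change CTA button colour", 2, 3, 10),
]

for idea in prioritise(backlog):
    print(f"{idea.ice_score:>4}  {idea.name}")
```

Notice how the easy, well-evidenced form fix outranks the bigger but harder calculator build. That's the point of ICE: it surfaces quick, likely wins before expensive bets.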

A CRO programme dies in a silo. Its insights are only valuable when shared, discussed, and acted upon by both marketing and sales. Regular communication is non-negotiable for turning test results into revenue.

Establishing a Cadence for Growth

Finally, you have to operationalize the whole thing. Set a consistent rhythm for reviews and communication. This is how CRO stops being a bunch of random projects and becomes a core part of how your business grows.

Set up bi-weekly or monthly meetings to go over recent test results and share what you've learned. Make sure you invite people from both marketing and sales so everyone is on the same page about what’s working and why.

This feedback loop is what turns a good CRO programme into a great one. It creates a predictable engine for growth that you can actually rely on.